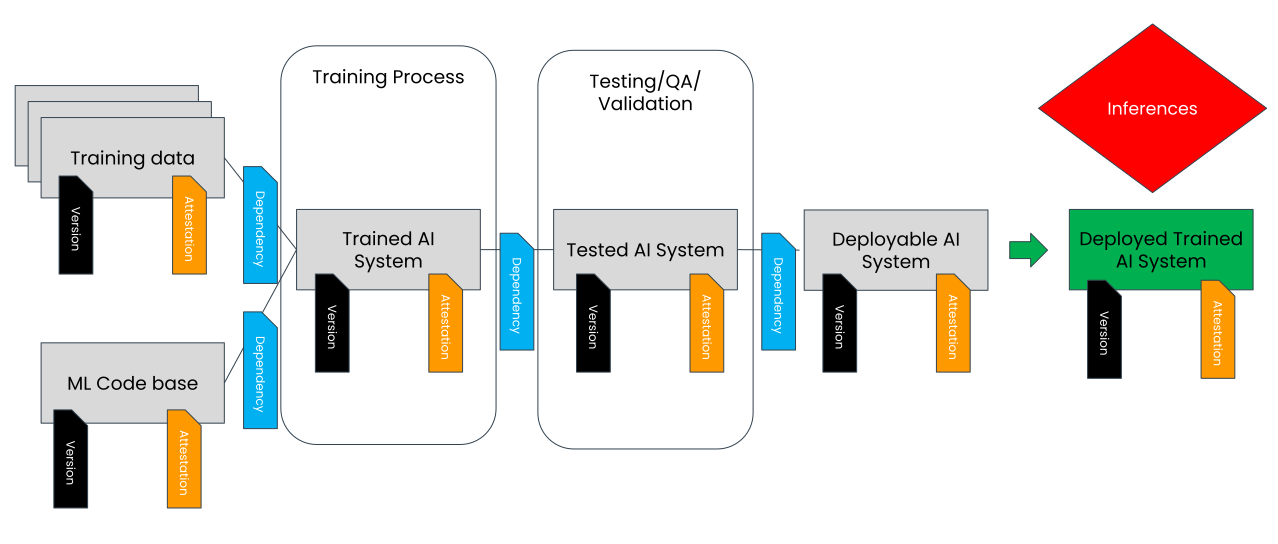

TAIBOM is an emerging standard to describe and manage AI systems and AI system risk. TAIBOM addresses the full AI supply chain, from training data through the results that AI systems produce.


Why TAIBOM?

To manage AI systems and their risk, we need to know which version we are using, where it came from and how it's put together.

If we can't label, version and attest a system (that is, be sure it's what it says it is), we have no foundation for making higher-level statements. It's like building a house on sand. We need robust, cryptographically backed system descriptions before we can start.
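As a minimal sketch of what "label, version and attest" can mean at the lowest level, the Python below binds a component's name and version to a SHA-256 digest of its exact bytes, then later checks whether a copy still matches. The `ComponentId` type and the `label` and `attest` helpers are hypothetical illustrations, not part of the TAIBOM specification. A real attestation would also be signed by its issuer; signing is left out here so the example stays within the standard library.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class ComponentId:
    """Hypothetical identity record: a name and version bound to exact content."""
    name: str
    version: str
    sha256: str  # hex digest of the component's bytes


def label(name: str, version: str, content: bytes) -> ComponentId:
    # Label and version a component by recording a digest of its exact bytes.
    return ComponentId(name, version, hashlib.sha256(content).hexdigest())


def attest(component: ComponentId, content: bytes) -> bool:
    # "Is it what it says it is?": recompute the digest and compare it,
    # in constant time, with the one recorded when the component was labelled.
    actual = hashlib.sha256(content).hexdigest()
    return hmac.compare_digest(component.sha256, actual)


if __name__ == "__main__":
    weights = b"example model weights"  # stand-in for a real model file
    model_id = label("example-model", "1.0.0", weights)
    print(attest(model_id, weights))            # True
    print(attest(model_id, weights + b"\x00"))  # False: one extra byte breaks the match
```

Any change to the content, however small, produces a different digest, which is what makes the label a usable foundation for later statements about the component.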

TAIBOM addresses the technical challenge of providing formal descriptions of AI systems and their dependencies. It also provides a method of describing and validating subjective claims about the qualities of these systems.

Why the "T"?

The "T" stands for "trustable" (not "trustworthy"). Trustable implies a necessary but insufficient basis for trust. TAIBOM is designed to interoperate with other AI trust measures, such as vanilla AIBOMs and model cards, and adds the critical foundations needed to make trustworthiness assessments.

Technical building blocks

TAIBOM is underpinned by the interoperable W3C Verifiable Credentials standard, and provides:

- Identity: A formal method of naming, versioning and validating the constituent parts of an AI system

- Dependencies: A method of describing the (complex) system dependencies in AI. It describes how systems are made from parts and how one component has an implied risk dependency on another.

- Annotation: A method of annotating components and systems with security, licensing and broader attributes, including positive and negative risk implications

- Inferencing: A method of reasoning about the inherent risk in an AI system by transitively propagating risk through its dependencies
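To make the dependency, annotation and inferencing ideas concrete, the sketch below models a small AI supply chain as a dependency graph, attaches annotations to individual components, and lets a system inherit every annotation found anywhere beneath it. The component names, the annotation strings and the `transitive_dependencies` and `inherited_risks` helpers are all hypothetical. In TAIBOM these statements would be carried as W3C Verifiable Credentials; this plain-data version leaves that layer out and only illustrates the propagation logic.

```python
from collections import deque

# Hypothetical dependency graph: each component lists the components it is built from.
DEPENDS_ON: dict[str, list[str]] = {
    "chat-app@2.1": ["fine-tuned-model@1.3", "inference-lib@0.9"],
    "fine-tuned-model@1.3": ["base-model@1.0", "tuning-data@2024-05"],
    "base-model@1.0": ["web-corpus@v7"],
    "inference-lib@0.9": [],
    "tuning-data@2024-05": [],
    "web-corpus@v7": [],
}

# Hypothetical (negative) annotations attached to individual components.
ANNOTATIONS: dict[str, list[str]] = {
    "web-corpus@v7": ["license-unknown"],
    "inference-lib@0.9": ["known-vulnerability"],
}


def transitive_dependencies(root: str) -> set[str]:
    # Breadth-first walk collecting every component the root depends on,
    # directly or indirectly; `seen` stops shared parts being visited twice.
    seen: set[str] = set()
    queue = deque(DEPENDS_ON.get(root, []))
    while queue:
        component = queue.popleft()
        if component in seen:
            continue
        seen.add(component)
        queue.extend(DEPENDS_ON.get(component, []))
    return seen


def inherited_risks(root: str) -> dict[str, set[str]]:
    # Risk propagates upward: a system inherits the annotations on itself and
    # on all of its transitive dependencies, keyed by where each one came from.
    risks: dict[str, set[str]] = {}
    for component in {root} | transitive_dependencies(root):
        for note in ANNOTATIONS.get(component, []):
            risks.setdefault(note, set()).add(component)
    return risks


if __name__ == "__main__":
    for note, sources in sorted(inherited_risks("chat-app@2.1").items()):
        print(note, "inherited from", sorted(sources))
```

Here the top-level `chat-app@2.1` carries no annotation of its own, yet it inherits both the unknown licence on the training corpus and the vulnerability in the inference library. The sketch combines annotations by simple set union, which suits negative ones; positive annotations, which the Annotation building block also allows for, would need their own combination rule.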

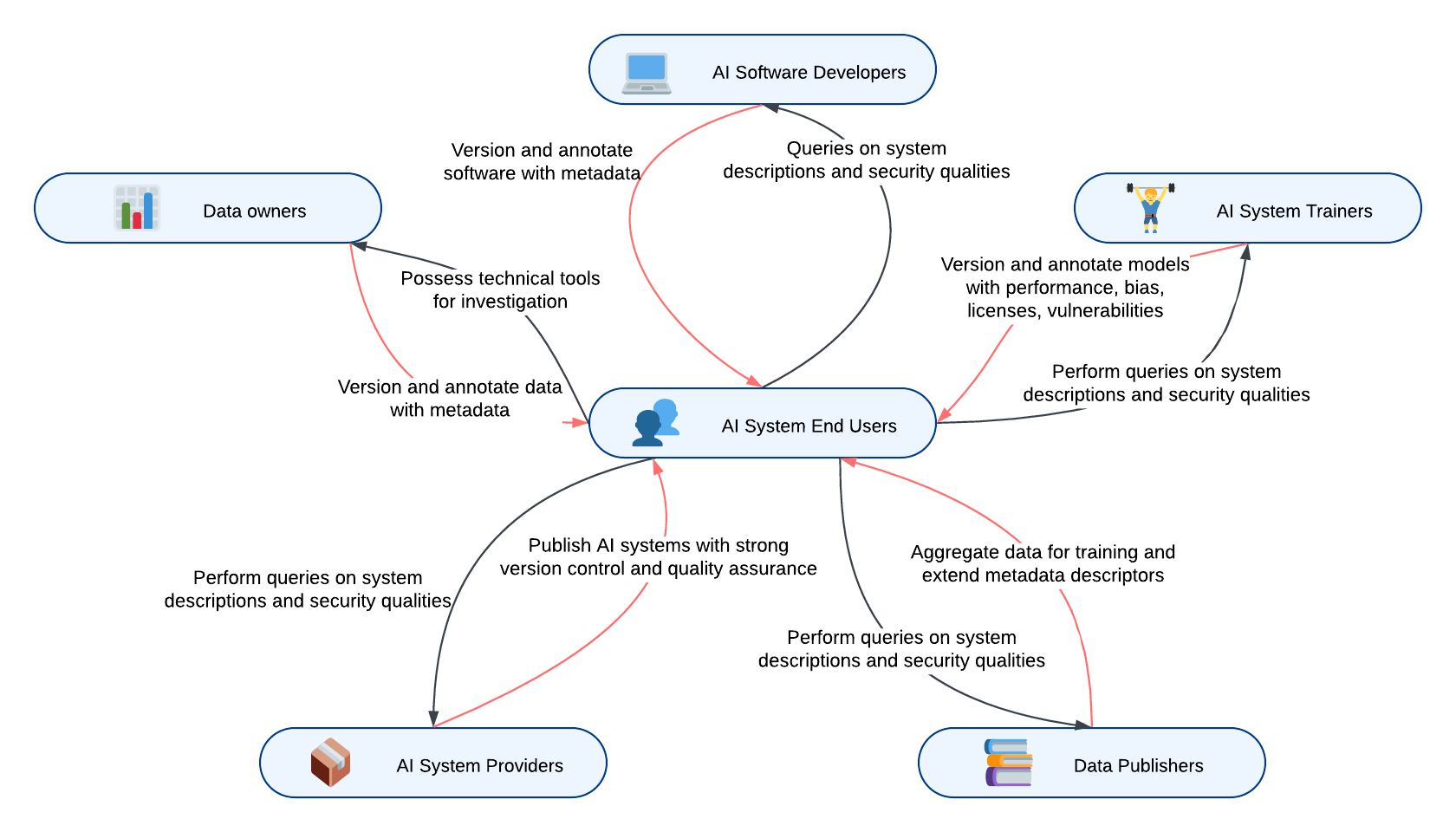

Who can use TAIBOM

TAIBOM is distributed by nature, reflecting the complex supply chain of AI systems. Likewise, the technology can be used by many stakeholders:

- Data owners: can version the data on which AI systems are trained, and annotate it with things like copyright licenses

- AI software developers: can version the software, and annotate this software with licenses or vulnerabilities.

- AI system trainers: can version the trained models and annotate them with things like performance metrics, bias metrics, licenses or vulnerabilities

- Data publishers: can aggregate data for training, using or extending the metadata descriptors

- AI system providers: can publish AI systems with strong version control, extended metadata descriptors and quality assurance measures

But the main users are the AI system end users. With TAIBOM, AI system users have the technical tools to investigate and query system descriptions and security qualities in detail.

Benefits

Security

The trustworthiness (or trustability) of the AI system improves through strong interoperable foundations.

Supply Chain Transparency

End users can see in detail where the system comes from, allowing them to make informed decisions on usage based on relative trust.

IP and Licensing

Licensing and IP terms become more transparent, and easier to respect, across the value chain, protecting end users, data owners and system developers.

TAIBOM Community

TAIBOM was seed-funded by the UKRI Technology Missions Fund. It is now a standalone working group under Techworks, the deep tech trade body.

Its founder members include NquiringMinds, Copper Horse, Techworks, BSI and BAE. It is now a fully open body accepting new members.

Get Involved

Email: info@techworks.org.uk to get involved